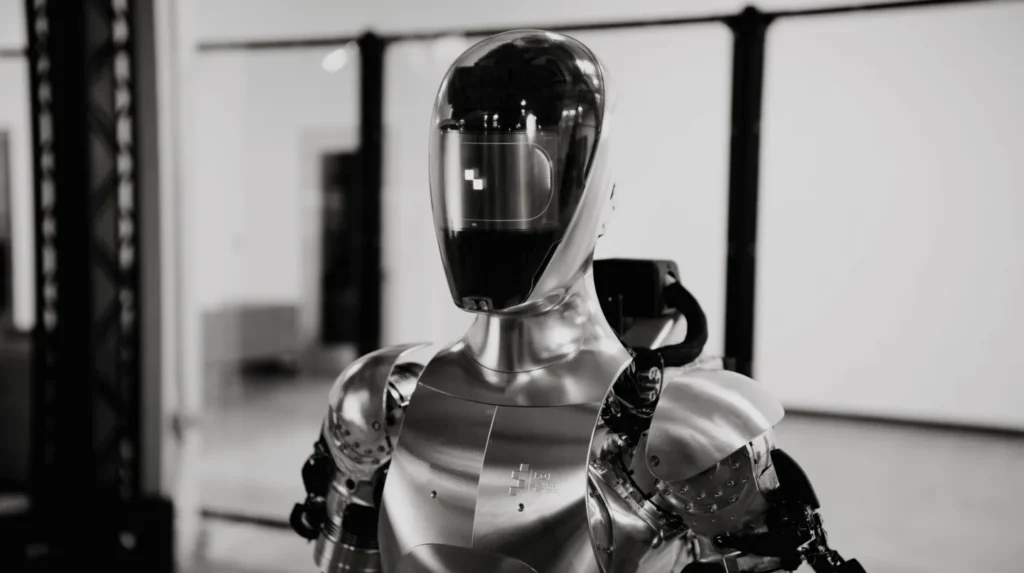

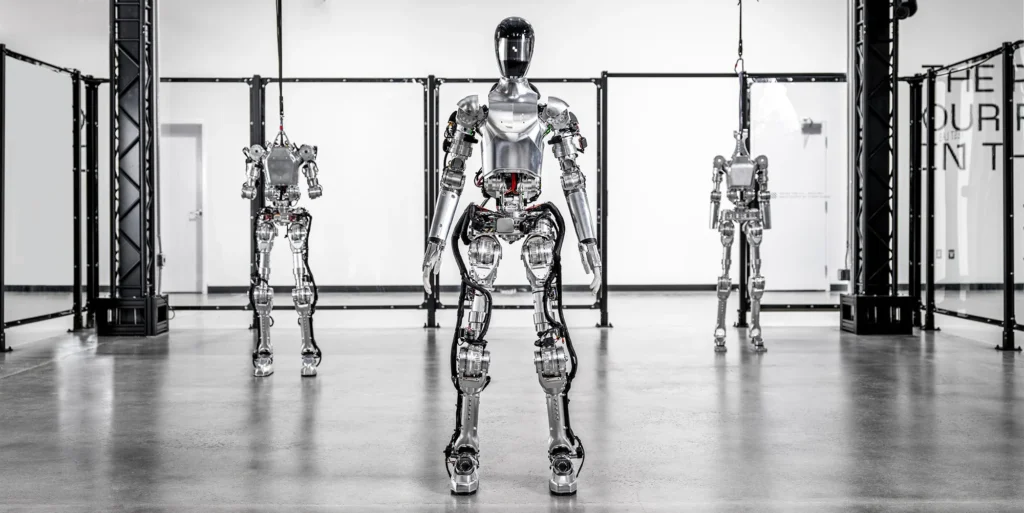

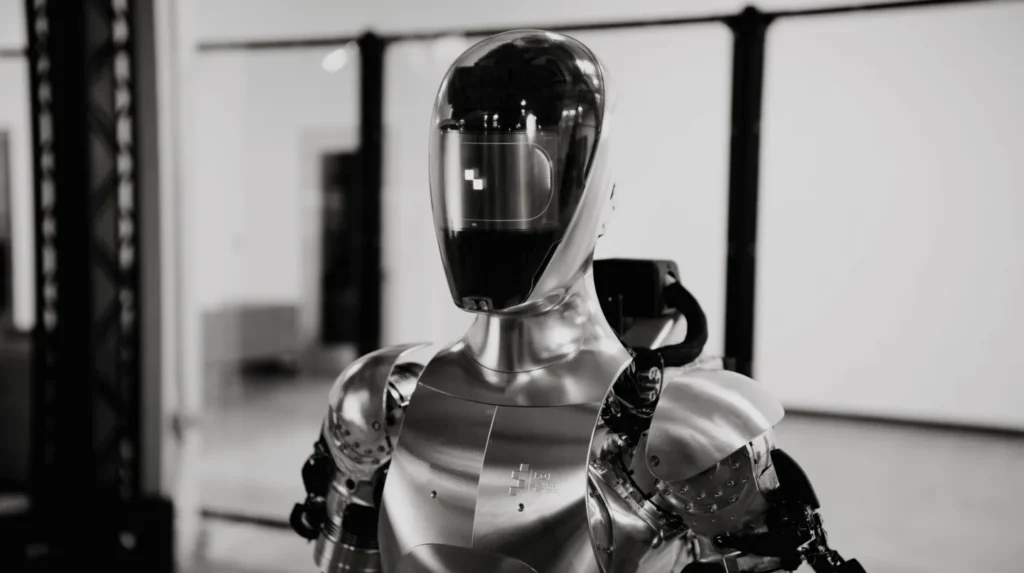

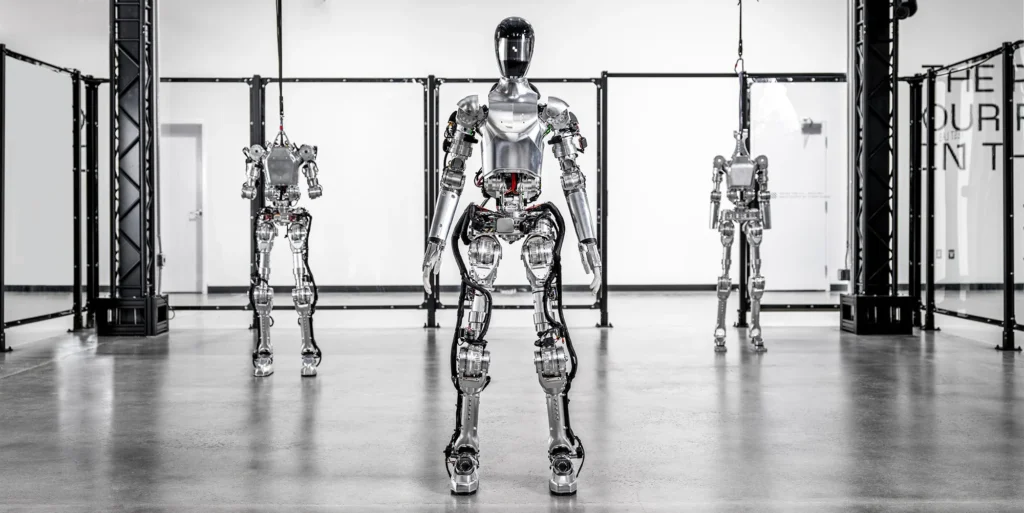

AI robotics company Figure has partnered with OpenAI to develop an impressive humanoid robot that can hold full conversations with people in real time.

“OpenAI models provide high-level visual and language intelligence. Figure neural networks deliver fast, low-level, dexterous robot actions,” Figure shared on Wednesday on X.

With OpenAI, Figure 01 can now have full conversations with people

- OpenAI models provide high-level visual and language intelligence

- Figure neural networks deliver fast, low-level, dexterous robot actions

Everything in this video is a neural network: pic.twitter.com/OJzMjCv443

— Figure (@Figure_robot) March 13, 2024

The video, which has more than 2 million views on X, showcases the robot engaging in conversation as it describes to Senior AI Engineer Corey Lynch what it sees.

“I see a red apple on a plate in the center of the table, a drying rack with cups and a plate, and you standing nearby with your hand on the table,” the robot tells Lynch at the beginning of the video.

The robot, named Figure 01, proceeds to hand Lynch an apple when he asks for something to eat. It also explains its actions to the engineer in a human-like tone.

Lynch broke down the technology on X, saying, “We feed images from the robot’s cameras and transcribed text from speech captured by onboard microphones to a large multimodal model trained by OpenAI that understands both images and text.”

“The model processes the entire history of the conversation, including past images, to come up with language responses, which are spoken back to the human via text-to-speech,” he added.

Let's break down what we see in the video:

All behaviors are learned (not teleoperated) and run at normal speed (1.0x).

We feed images from the robot's cameras and transcribed text from speech captured by onboard microphones to a large multimodal model trained by OpenAI that… pic.twitter.com/DUkRlVw5Q0

— Corey Lynch (@coreylynch) March 13, 2024
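The loop Lynch describes (camera image plus transcribed speech in, a spoken reply out, with the whole conversation history passed to the model each turn) can be sketched roughly as follows. This is only an illustrative sketch, not Figure's or OpenAI's code: `Turn`, `Conversation`, `stub_model`, and the `speak` callback are hypothetical names, and the stub stands in for the multimodal model and text-to-speech system, whose real interfaces have not been published.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Turn:
    """One entry in the conversation history (hypothetical structure)."""
    role: str                      # "human" or "robot"
    text: str                      # transcribed speech or generated reply
    image: Optional[bytes] = None  # camera frame captured with this turn, if any


@dataclass
class Conversation:
    """Keeps the full history so the model can refer back to earlier turns."""
    history: List[Turn] = field(default_factory=list)

    def step(
        self,
        frame: bytes,
        transcript: str,
        model: Callable[[List[Turn]], str],
        speak: Callable[[str], None],
    ) -> str:
        # 1. Record the new camera frame and the transcribed speech.
        self.history.append(Turn("human", transcript, frame))
        # 2. The model receives the ENTIRE history, including past images.
        reply = model(self.history)
        # 3. Store the reply and hand it to text-to-speech.
        self.history.append(Turn("robot", reply))
        speak(reply)
        return reply


def stub_model(history: List[Turn]) -> str:
    """Placeholder for the multimodal model (assumption, not a real API)."""
    latest = history[-1].text.lower()
    images_seen = sum(1 for turn in history if turn.image is not None)
    if "hungry" in latest:
        return "Sure, here is the apple."
    return f"I have seen {images_seen} image(s) so far."


conversation = Conversation()
spoken: List[str] = []  # stands in for a text-to-speech engine
conversation.step(b"frame-1", "What do you see?", stub_model, spoken.append)
conversation.step(b"frame-2", "I'm hungry", stub_model, spoken.append)
```

Because every call passes the accumulated `history` rather than only the latest utterance, a real model in place of `stub_model` would have access to earlier images and turns when it generates a reply.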

He also shared that the robot can use common-sense reasoning and translate ambiguous requests like “I’m hungry” into behavior like “hand the person an apple.”

The engineer explained that the robot has a powerful short-term memory. “Consider the question, ‘Can you put them there?’ What does ‘them’ refer to, and where is ‘there’? Answering correctly requires the ability to reflect on memory,” he said on X.

A large pretrained model that understands conversation history gives Figure 01 a powerful short-term memory.

Consider the question, "Can you put them there?" What does "them" refer to, and where is "there"? Answering correctly requires the ability to reflect on memory.

With a… pic.twitter.com/rNnsh5hF1V

— Corey Lynch (@coreylynch) March 13, 2024

Many social media users were confused by the robot’s speech pattern, which closely resembles a real human’s. “It seems fishy at about 52 seconds in, the robot says, ‘it’s the only ah edible item.’ The ‘ah’ seems like something a human would add in,” said one user.

It seems fishey at about 52 seconds in the robot says "it's the only ah edible item". The "ah" seems like something a human would add in.

It's still very impressive.

— ➖🤖➖Dojobot➖🤖➖ (@KentNinneman) March 14, 2024

Another user said, “Interesting that the robot has ums and ahs like a real human… unless it is a real human doing what Google did ‘to inspire developers’?”

“So I gave you the apple because it’s the only — uhhh — edible item I could provide you with from the table”

Interesting that the robot has ums and ahs like a real human… unless it is a real human doing what Google did “to inspire developers”?

— Vikas Saini (@DrVikasSaini) March 13, 2024

Lynch explained the robot’s speech pattern on X, saying it is “fully synthesized speech, output by a neural network.”

The video drew mixed reactions on the social media platform, with many users calling it impressive and others calling it scary or doubting the robot’s abilities.

“Why is it only doing tasks when the camera is in a front shot and not back shots from behind it as well when doing tasks?” said one user.

Why is it only doing tasks when the camera is in a front shot and not back shots from behind it as well when doing tasks?

The guy asks a questions, shows a brief camera angle of behind the robot and the guy, and then pans back to a front shot when it does the task or says…

— apollo (@apollomarcellus) March 14, 2024

“Finally… good things are yet to come. Can’t wait to buy this robot. Well done, team,” shared another user.

Finally it happenings good things are yet to come can’t wait to buy this Robot well done team

— Waheed Rafiq (@WaheedRafiq16) March 13, 2024

Figure announced earlier this month that it raised around 99 billion yen ($675 million) in funding from major tech companies, such as Microsoft and Nvidia.

OpenAI is an AI research organization known for releasing ChatGPT.